AI Is Supercharging Disinformation Warfare

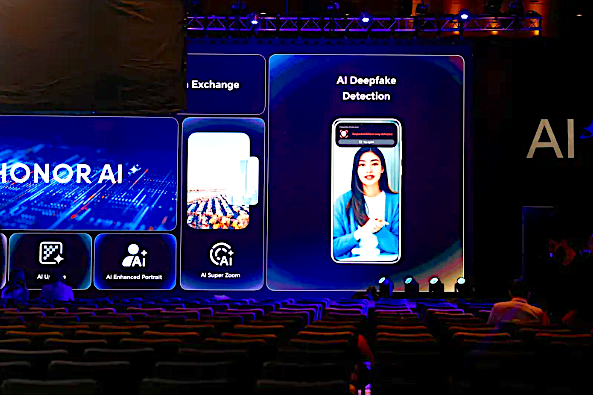

Banners displaying a new AI deepfake detector at a demonstration in Barcelona, Spain, earlier this year. Photo by Bruna Casas / Reuters

Foreign Affairs

In June, the secure Signal account of a European foreign minister pinged with a text message. The sender claimed to be U.S. Secretary of State Marco Rubio with an urgent request. A short time later, two other foreign ministers, a U.S. governor, and a member of Congress received the same message, this time accompanied by a sophisticated voice memo impersonating Rubio. Although the communication appeared authentic, its tone matching what would be expected from a senior official, it was actually a malicious forgery: a deepfake, engineered with artificial intelligence by unknown actors. Had the forgery gone undetected, it could have sown discord, compromised American diplomacy, or extracted sensitive intelligence from Washington’s foreign partners.

This was not the last disquieting example of AI enabling malign actors to conduct information warfare—the manipulation and distribution of information to gain an advantage over an adversary. In August, researchers at Vanderbilt University revealed that a Chinese tech firm, GoLaxy, had used AI to build data profiles of at least 117 sitting U.S. lawmakers and over 2,000 American public figures. The data could be used to construct plausible AI-generated personas that mimic those figures and craft messaging campaigns that appeal to the psychological traits of their followers. GoLaxy’s goal, demonstrated in parallel campaigns in Hong Kong and Taiwan, was to build the capability to deliver millions of different, customized lies to millions of individuals at once.
